The need for accurate data and the resulting proliferation of surveys have led to a problem known as survey fatigue. Simple, pragmatic good practices can help you fight it. Let's explore some, from the most straightforward to the most ambitious.

Hang in there, because survey fatigue is only likely to get worse

Collecting accurate feedback from learners has become essential to improving learning efficiency. The most straightforward tool for doing so remains the survey. Alas, a major issue arises here: learning is far from the only situation that requires data. Making a purchase, resolving a complaint, or visiting a web page, for example, are all occasions on which people are now asked for feedback.

The resulting survey fatigue has become a persistent problem for L&D professionals. Constantly bombarded by surveys, respondents grow tired of answering: response rates drop, or people respond inconsistently, producing data of questionable reliability. Of course, it is better to do nothing than to run a survey with a 20% response rate!

Survey fatigue is avoidable

Our experience as leaders in learning evaluation has taught us some good practices that can greatly improve the respondent’s experience and help minimize survey fatigue.

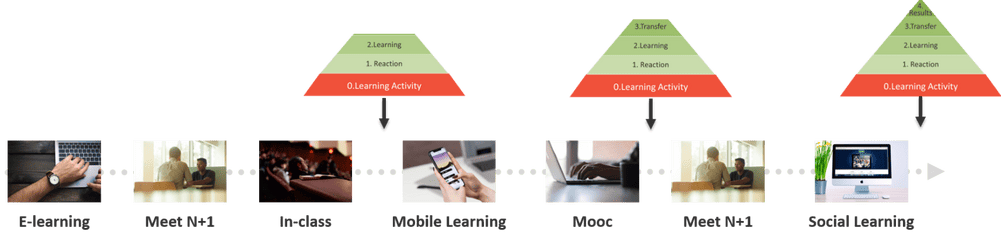

- Make it lean and easy: Keep in mind that your respondents only have so much time to answer your survey, so keep it short and limit the number of questions. Define the leanest evaluation process for each program so that you do not over-survey participants. The example below shows a 3-step evaluation process implemented in a 6-month continuous learning program.

Of course, surveys should be easily accessible from all devices, requiring minimal effort and as few clicks as possible to reach them.

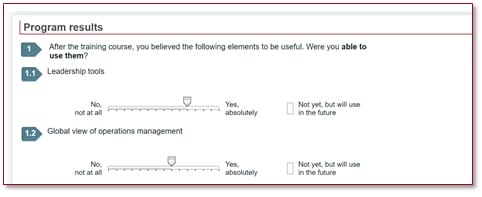

- Aim for automated customization: customizing the questionnaire content is one of the key success factors. Keep in mind, however, that for a large-scale roll-out this is only feasible and cost-efficient when it is automated.
  - Example 1: Adapt questionnaires automatically to the type and content of each program.
  - Example 2: Include behavior or performance objectives in the follow-up questionnaire, both for a self-assessment by the learner and for a performance evaluation by the manager.
  - You can also go further: in the example below, the respondent's own answers from a previous questionnaire have been included. This lets respondents connect the survey to their personal objectives and professional context, which improves learning impact.

By applying these and other general principles (making it clear that answers are anonymous, sending reminders, keeping the design simple, and so on), we achieve average response rates with US companies of 77% for initial evaluations (Kirkpatrick level 1) and 64% for follow-up evaluations (Kirkpatrick level 3).

- Communicate about how surveys are used: there's no better incentive than the feeling of doing something useful. So tell your respondents that their answers are being used to improve learning efficiency. Give them examples of courses that have been improved, created, or removed thanks to their feedback. Make it tangible for them.

- Provide rewards: if you're asking respondents for something, you should give something in return. For example, respondents who complete our surveys receive a report showing where they stand compared to the rest of their training group. And participant involvement can go much further still. Let's see how.

What’s next?

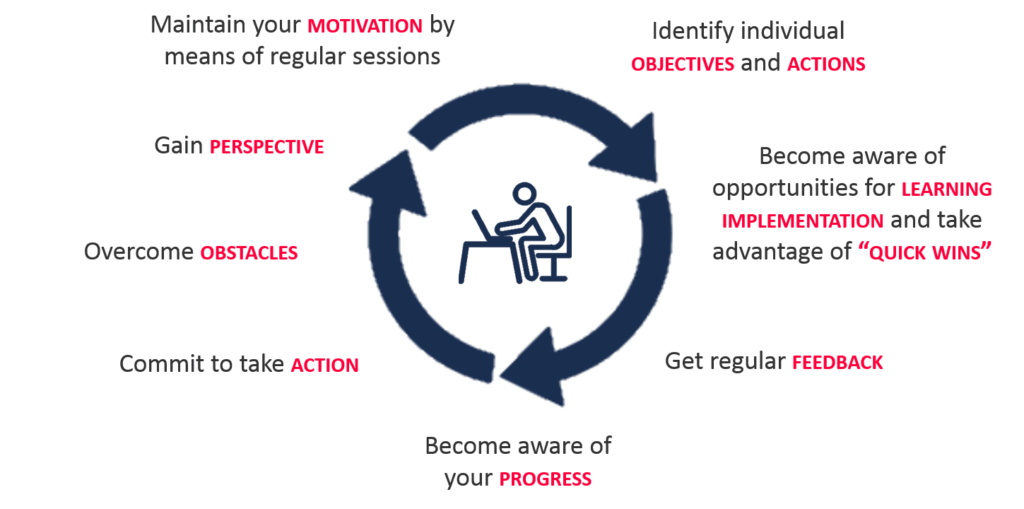

The practices above all help counter survey fatigue. Let's now see how to go much further for Kirkpatrick level 3 and level 4 evaluations. Here, new digital post-training support solutions that help learners bridge the gap between learning and doing could become an innovative alternative to surveys in the very near future. Based on coaching techniques, these solutions are meant to act as true companions: they help each person reflect on what they have learned, build an action plan, identify opportunities to apply their learning, and overcome obstacles. As a result, both learning impact and learner engagement are maximized.

These programs offer another significant benefit: the ability to capture a uniquely rich set of data about each learner's journey. Automatically turning this unstructured data into actionable insights is the challenge that will enable us to replace surveys for measuring learning impact. At Docebo, we are launching the first pilots. If this project appeals to you, you know who to call!