This article is the fourth in a series on preparing L&D for the AI-powered workforce. Here, we explore how to architect a cohesive, enterprise-wide approach to AI implementation, with governance that gives employees the clarity they need to innovate and execute with confidence.

The rapid adoption of AI across companies often outpaces the establishment of strategic business alignment and effective governance. The result can be a scattered landscape of disparate, disconnected AI initiatives that promise transformation but too often deliver fragmentation. To mitigate the risks of fragmented AI and unlock its full transformative potential, organizations can adopt a unified, strategic approach to AI implementation. In this action, we focus on how to architect and orchestrate a cohesive, aligned approach to AI implementation across the enterprise, using a disciplined framework that provides the clarity your workforce needs to innovate and execute with confidence.

When AI tools are integrated without a unified strategy for technology, people, and processes, the costs are significant. Without centralized oversight of the technology, efforts become siloed, investments are duplicated, and learning is not shared. This unmanaged proliferation can lead to three distinct and costly forms of fragmentation:

- Vertical fragmentation: Different organizational levels pursue disconnected AI initiatives, creating a misalignment between executive strategy and frontline implementation

- Horizontal fragmentation: Functional departments develop incompatible AI approaches, preventing cross-functional collaboration and knowledge transfer

- Technical fragmentation: The adoption of multiple AI tools without a unified competency framework forces employees to navigate contradictory and confusing workflows

Ultimately, this fragmentation amplifies operational and ethical risks, generating substantial business costs through reduced adoption, inconsistent application, and diminished return on AI investments. Organizations of almost any size often become siloed and disconnected. The current speed of AI adoption can also mask a deeper issue: there’s a tendency for functions to move fast within their domain and engage in isolated experimentation with AI initiatives. Quick experimentation can seem beneficial, but many disconnected AI initiatives offer limited business value without the benefit of a core AI infrastructure for the enterprise.

Building a cohesive AI foundation

In this early era of AI adoption and implementation, technical shortcomings are often isolated. Building AI as Infrastructure is crucial because AI adoption will fundamentally redesign workflows, decision-making, and nearly all ways of working. A shared infrastructure enables function-specific AI initiatives to execute quickly on business strategies. Successfully building this infrastructure requires a multi-faceted commitment, starting with strategic vision and extending through data, governance, operational oversight, and responsible practices.

Strategic vision and executive sponsorship. Many organizations are struggling to realize AI’s full promise because of the fragmentation described above. A recent Gartner survey highlights a significant strategic gap at the functional level: a mere 23% of supply chain organizations have a formal, documented AI strategy. Without a guiding vision, AI initiatives can become trapped in pilot stages, leading to escalating expenses, reduced ROI, and a compromised competitive position. Executives also face a paradox: employees are adopting AI faster than governance and security policies can be developed, raising critical concerns about data integrity and AI misuse. We recommend that senior leaders align AI adoption and implementation with core business objectives and KPIs. This alignment builds leadership and employee buy-in as a first step in creating a common language of AI for the enterprise. One company we worked with identified more than 40 AI-related OKRs across multiple functions, with many interdependencies. It followed up with an enterprise-wide training initiative to establish principles of AI usage. By aligning its business objectives with function-specific OKRs and pairing them with training, the company now has clear accountability measures to drive business value and allocate resources properly through a shared OKR process.

Strong data governance and quality. Many large companies have multiple data sources and stores, which leads to data fragmentation. Nearly all businesses report that poor data quality is harming their AI initiatives, and AI is only as good as the data it’s trained on. In most enterprises, this foundational data is fragmented, outdated, inconsistent, or incomplete, which leads to the “garbage in, garbage out” problem. To create a consolidated, simplified single source of truth for all enterprise data, companies should consider establishing one enterprise data strategy. This strategy involves investing in data readiness (cleaning, consolidation, ownership) and implementing enterprise-wide data standards, policies, and continuous audits.

Data quality must be treated as a strategic imperative, because strong data governance is what enables AI-related strategies to grow rapidly. The financial stakes are substantial: poor data quality alone costs companies an average of $15 million per year. Establishing and enforcing these critical data standards is a primary function of a governance framework. In one large company, a centralized function provided data-as-a-service to the broader enterprise, ensuring that AI technology and processes were adopted smoothly and consistently. This centralization helped build trustworthy AI solutions and made it easier to gather analytics and predictive insights.

Centralized oversight with cross-functional collaboration. Arguably, the most challenging aspect of building this foundation is also one of the most necessary: providing centralized oversight of AI-related implementation. This oversight reduces fragmented AI strategies, increases collaboration, produces a more complete view of spending and processes, and directly improves decision-making. Lack of centralized oversight impedes the ability to find inefficiencies and make sound choices, while a unified approach ensures transparency and accountability throughout the entire AI lifecycle.

Adopting unified AI solutions means integrating them deeply into core workflows rather than treating them as standalone applications. This approach directly mitigates technical fragmentation and isolated data silos. A lack of centralized oversight is more than just an inefficiency; it is a fundamental architectural flaw that prevents an organization from leveraging AI at scale. Viewing AI as Infrastructure is the solution. Without this approach, disparate AI solutions remain orphaned, creating the same data risks, concurrency issues, and auditability challenges that plagued legacy software. Neglecting this foundational integration leads to continuous, expensive failures and a permanent competitive disadvantage, while embracing it creates a truly connected and intelligent enterprise.

Prioritize adopting responsible AI practices. As we detailed in Action 2, the most significant impediments to successful AI adoption are often human and organizational. Cultural resistance, skill shortages, a lack of executive buy-in, and low employee trust create a bottleneck that prevents many AI initiatives from delivering on their full potential. A recent PwC survey indicates that “people — including senior leaders — are holding AI agents back.” Common concerns like cybersecurity and cost are often safe excuses, while the true underlying challenges are rooted in organizational change, integrating AI agents into workflows, and workforce adoption and trust. It is important to create an environment where AI-related pilot initiatives are encouraged so that teams can learn and adjust quickly.

Encouraging employees to raise doubts or concerns about AI, and providing comprehensive training, are essential steps to ensure a clear understanding of responsible AI principles and deepen trust in the technology. These internal efforts to build trust and responsibility are becoming even more critical, as the currently fragmented regulatory landscape for AI is rapidly converging toward mandatory, global governance requirements. Gartner predicts that “by 2027, AI governance will become a requirement of all sovereign AI laws and regulations worldwide.” This signals a shift from voluntary ethical guidelines to legally binding compliance, which creates a strategic window of opportunity for early adopters of comprehensive AI governance.

A balanced governance process

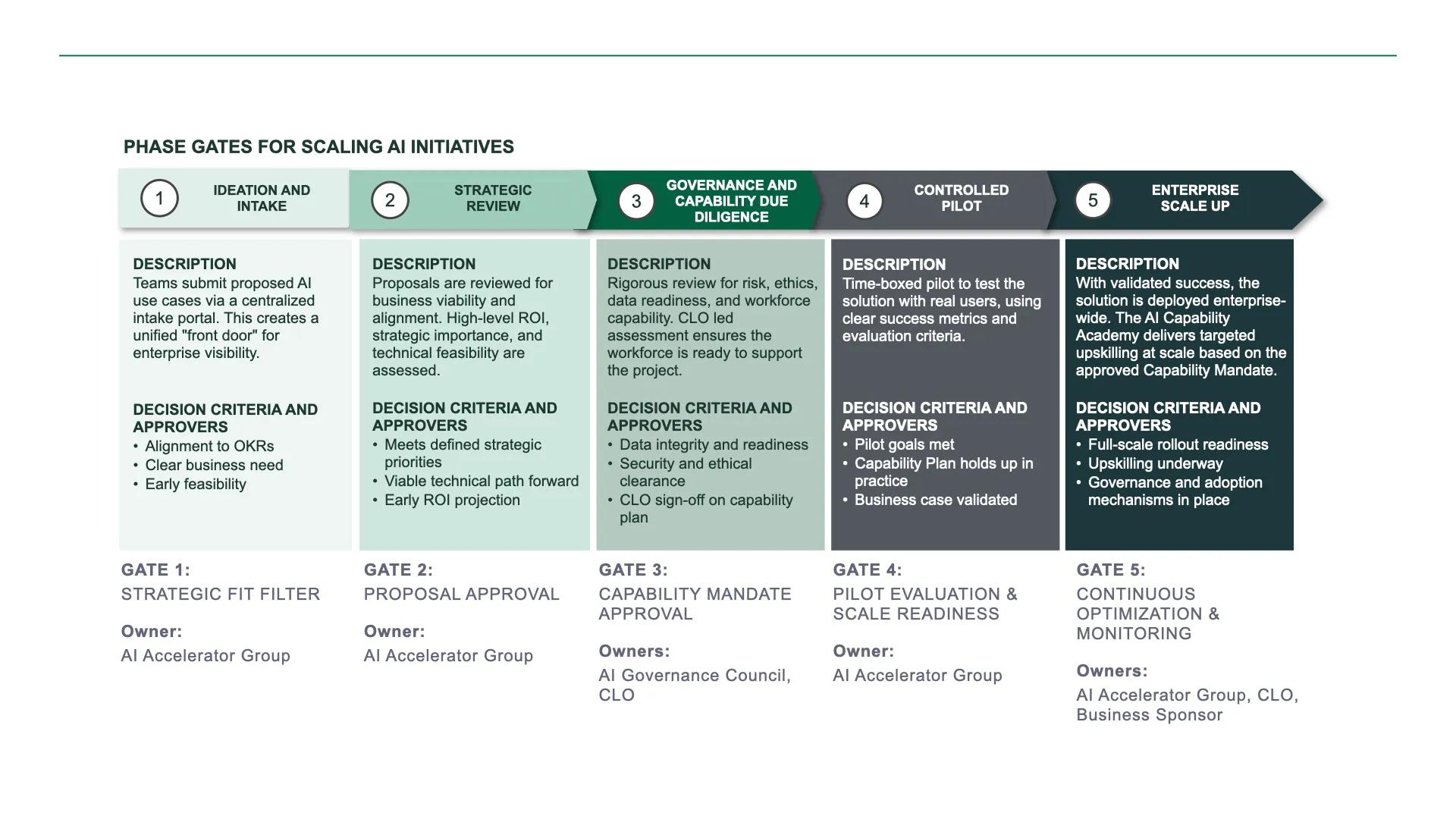

To prevent fragmentation and gain the maximum advantage from AI as Infrastructure, the key is to establish a balanced governance model. This is achieved by creating a formal group (let’s call it an AI Accelerator Group): an empowered, agile team of cross-functional leaders tasked with providing disciplined oversight without sacrificing speed. This group has a dual role: first, it directs high-stakes, enterprise-wide transformations; and second, it cultivates grassroots innovation by providing the tools and guardrails for functions to experiment safely. The group’s top-down strategic work is managed through a disciplined, five-phase gated process with defined owners:

- Phase 1: Ideation and intake. The process begins with a standardized but simple intake step where any team can submit a proposed AI use case. This creates a single “front door” for all new AI-driven ideas, providing immediate enterprise-wide visibility.

- Phase 2: Strategic review. The AI Accelerator Group assesses the proposal against core business criteria: What is the potential ROI? Does it directly support our stated quarterly and annual OKRs? Is it technically feasible with our current infrastructure?

- Phase 3: Governance and capability due diligence. A project that clears the strategic review undergoes rigorous risk, security, and ethical screening. The project is also assessed for Data Readiness and Integrity; a project with a sound business case can be vetoed if its underlying data foundation is weak or biased. This phase includes the most critical gating mechanism: Capability Due Diligence. Led by the CLO, this step answers the question: “Do we have the workforce readiness to make this successful?” No project receives pilot funding without the formal sign-off from the CLO on the workforce capability plan. This approved plan becomes the formal Capability Mandate, the very charter we detailed in Action 3 for translating business strategy into an actionable execution plan.

- Phase 4: Controlled pilot. With a solid business case and a capability plan in place, the project is greenlit for a time-boxed, controlled pilot with clear success metrics. This allows for real-world testing in a contained environment.

- Phase 5: Enterprise scaling. Upon successful completion of the pilot, the AI Accelerator Group approves the initiative for a full-scale rollout. This approval puts the formal Capability Mandate into action, deploying the targeted workforce readiness plan that was designed and signed off in Phase 3. The AI Capability Academy is then tasked with delivering all necessary training and development, acting as the enterprise engine for building these critical skills at scale.

Crucially, this heavyweight lifecycle does not apply to all ideas. The group’s second role is to enable bottom-up agility by maintaining a safe harbor for experimentation. Teams can innovate freely within this space, without formal approval, as long as they use pre-approved tools, adhere to clear data policies, and have completed the prerequisite AI literacy training. This creates an ideal environment for rapid, low-risk prototyping. The most successful of these grassroots projects can then be nominated for the formal governance process via a path to scale, ensuring the best ideas get the strategic backing they need to transform the enterprise.

This balanced governance process does more than manage initiatives: it replaces enterprise-wide uncertainty with predictability, reduces anxiety, and creates the psychological safety required for genuine experimentation.

L&D’s pivotal role in architecting cohesion

The CLO and their team are essential architects of the organization’s cohesive AI operating system. L&D’s contribution is multi-faceted: they are a key voice on the AI Accelerator Group, they lead the critical Capability Due Diligence phase, and their AI Capability Academy provides the license for employees to innovate in the safe harbor. This structure transforms L&D from a service function into a core pillar of the company’s strategic execution framework.

Action 4 next steps

- Establish clear, single-point ownership of the enterprise AI portfolio. If accountability is ambiguous, your first action is to designate a single executive leader. Recognizing this critical need, many organizations are creating a formal Chief AI Officer (CAIO) role, whose first mandate should be to charter and empower the AI Accelerator Group.

- How many “random acts of AI” are currently draining your budget? Mandate that all new AI initiatives enter through the single front door of centralized governance, while empowering grassroots innovation within a clearly defined safe harbor.

- Is your workforce readiness an input to your tech strategy, or an afterthought? Codify Capability Due Diligence as a mandatory, auditable gate in your project funding workflow. Making the CLO’s sign-off a prerequisite is the single most effective way to guarantee your AI investments will be adopted.

- Can you see your entire AI landscape on a single screen? If not, build a transparent portfolio dashboard that tracks all initiatives — both those in the formal lifecycle and those emerging from the safe harbor. This builds trust and helps you spot opportunities for cross-functional synergy.

- Is your L&D function funded to be strategic? Allocate budget for L&D to perform the front-end, strategic work of assessing capability implications and providing the foundational AI Literacy that enables safe, widespread experimentation.

Ultimately, a strategically aligned, well-governed approach to AI isn’t about control for its own sake; it’s about creating the disciplined conditions for breakthrough innovation and sustained market value. By bringing order to innovation, you unlock AI’s full potential. With a cohesive foundation in place, you are then empowered to explore how AI can revolutionize individual work.

Our next article will examine our fifth action, Enhance Traditional Training Through Augmented Performance Support, highlighting how this robust foundation enables real-time assistance directly in the flow of work.

About the author

Brandon Carson

Brandon Carson is a globally recognized leader in learning and currently serves as Chief Learning Officer at Docebo. He has held prominent roles such as CLO at Starbucks, where he led their global Learning Academies and the Future of Work practice, and Vice President of Learning and Leadership at Walmart, where he was responsible for global leader development and corporate onboarding. Brandon is the author of Learning In The Age of Immediacy: Five Factors For How We Connect, Communicate, and Get Work Done and L&D’s Playbook for the Digital Age, both from ATD Press. He is also the founder of L&D Cares, a nonprofit that offers no-cost coaching, mentoring, and resources to L&D professionals, empowering them to grow and thrive in their career.